👾 Tenmei.tech Agent Series #2:

Distributed Web Rendering

Disclaimer: This article is for educational and research purposes only. The techniques, models, and tools described here are intended to advance the art and science of human-like automation. Please use all automation technologies responsibly, ethically, and in compliance with local laws, website terms of service, and fair use policies. Tenmei.tech does not endorse or condone misuse or any activity that violates legal or ethical guidelines.

In the fast-evolving world of automation, flexibility and scalability are everything. This post is the second entry in the Tenmei.tech Bot Series, where we design practical, modular architectures for real-world scraping, rendering, and data collection — using open technologies, a sprinkle of ingenuity, and a dash of distributed thinking.

This time, we’ll demonstrate a simple, robust way to render web pages and capture screenshots or HTML on demand. A Raspberry Pi acts as a lightweight remote client. A more powerful server (Himeryu) runs the actual rendering workload in a secure, containerized Docker environment. This design makes it trivial to add more clients, centralize rendering, and keep your critical infrastructure secure and scalable.

Why Distributed Web Rendering?

Traditional scraping or automation is often limited by the resources of a single machine or cloud instance. By separating lightweight URL collection from the heavyweight rendering, you can:

🔹Create flexible “botnet” architectures for legitimate automation

🔹Keep your endpoints safe (clients can be IoT, internal, or very low-power)

🔹Scale rendering horizontally by adding more render nodes

🔹Avoid exposing your main server to the public internet

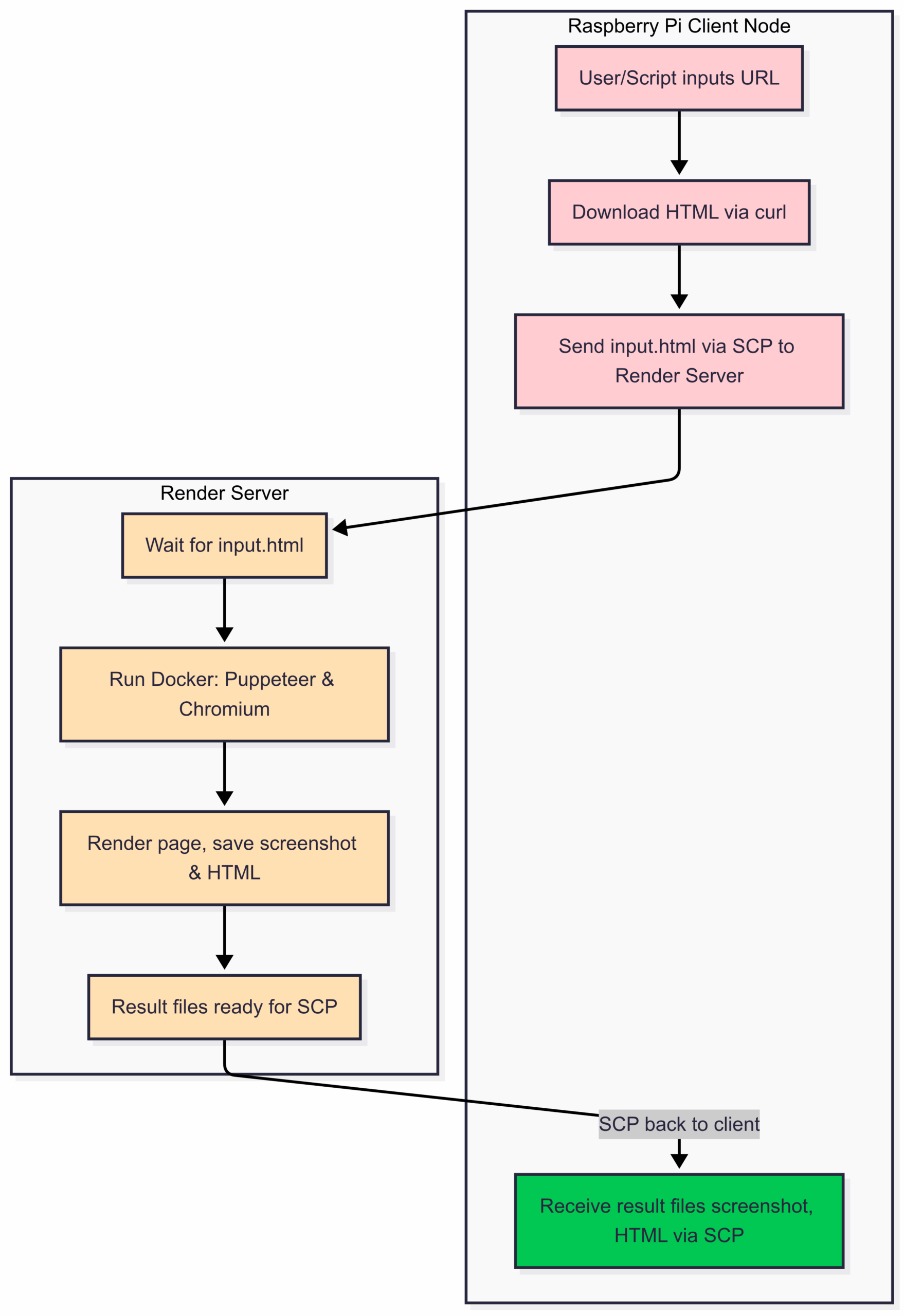

High-Level Architecture

Render Node (Main Server): Accepts HTML input, runs a Dockerized Puppeteer/Chromium instance to render and screenshot the page, and returns results securely.

Client Node (Raspberry Pi): Gathers URLs, downloads each page's HTML, and sends the resulting files to the render server via SSH/SCP.
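The sketch below makes the split concrete by showing how one job could be named on both nodes. It is a minimal Python sketch; Python ships with Raspberry Pi OS, so it is a convenient glue language on the client. The `RenderJob` class, the `/srv/render` root, and the `input/`/`output/` file naming are hypothetical conventions, not taken from the repo. The idea is simply that one filesystem-safe job ID ties the client's upload to the server's results.

```python
import re
import uuid
from dataclasses import dataclass
from pathlib import PurePosixPath

# Hypothetical job-ID rule: letters, digits, dash, underscore only, so the ID
# is safe to embed in file names and in remote shell commands.
_JOB_ID = re.compile(r"^[A-Za-z0-9_-]{1,64}$")


@dataclass(frozen=True)
class RenderJob:
    """One render request, identified by a filesystem-safe job ID."""

    job_id: str
    remote_root: str = "/srv/render"  # hypothetical base directory on the render node

    def __post_init__(self) -> None:
        if not _JOB_ID.match(self.job_id):
            raise ValueError(f"unsafe job id: {self.job_id!r}")

    @classmethod
    def new(cls, remote_root: str = "/srv/render") -> "RenderJob":
        # uuid4().hex is 32 hex characters, which always passes the check above.
        return cls(uuid.uuid4().hex, remote_root)

    @property
    def remote_input(self) -> str:
        # HTML uploaded by the client.
        return str(PurePosixPath(self.remote_root, "input", f"{self.job_id}.html"))

    @property
    def remote_screenshot(self) -> str:
        # Screenshot written by the container.
        return str(PurePosixPath(self.remote_root, "output", f"{self.job_id}.png"))

    @property
    def remote_rendered_html(self) -> str:
        # DOM after JavaScript has run, written by the container.
        return str(PurePosixPath(self.remote_root, "output", f"{self.job_id}.rendered.html"))
```

The ID is validated up front because it later ends up in file names and in commands executed over SSH.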

📱Key Technologies

Node.js + Puppeteer: Modern headless browser rendering with JS/CSS support.

Raspberry Pi: Lightweight, low-power client for URL collection or remote triggering.

SSH/SCP: Secure file transfer and command execution between client and server.

Docker: Isolation and reproducibility for headless browser workloads.

Why Docker?🐳

Docker provides containerized isolation for running browser automation securely and reproducibly, even on shared or remote servers. With Docker, you:

🔹Avoid dependency hell: All libraries, Node.js, and Chromium are inside the container.

🔹Sandbox risky code: Headless browsers are notoriously complex — Docker keeps them away from your main OS.

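🔹Portability: The same Dockerfile can typically be built on your laptop, a Raspberry Pi, or a big cloud VM without code changes, as long as the base image and Chromium are available for that CPU architecture. Images themselves are architecture-specific, so an x86-64 image has to be rebuilt (or built multi-arch) for the Pi's ARM processor.

🔹Resource control: Use Docker's memory, CPU, and process-count limits to contain runaway browser processes or hostile pages.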
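The resource-control point is easiest to see in a concrete command. The minimal sketch below builds a `docker run` command line with memory, CPU, process-count, and shared-memory limits. Only the image name `puppeteer-ram` comes from this post. The limit values, the `/input` and `/output` mount points, and the assumption that the container's entrypoint takes a job ID argument are hypothetical and would need to match your Dockerfile.

```python
import shlex
from pathlib import Path
from typing import List


def docker_run_args(
    image: str,
    input_dir: Path,
    output_dir: Path,
    job_id: str,
    memory: str = "1g",
    cpus: str = "1.0",
    pids_limit: int = 256,
    shm_size: str = "512m",
) -> List[str]:
    """Build a `docker run` command for one render job with resource limits applied."""
    return [
        "docker", "run",
        "--rm",                        # remove the container once it exits
        f"--memory={memory}",          # hard RAM cap; processes inside are OOM-killed beyond it
        f"--cpus={cpus}",              # CPU quota, e.g. "1.0" = at most one core's worth of time
        f"--pids-limit={pids_limit}",  # cap processes/threads (guards against fork bombs)
        f"--shm-size={shm_size}",      # Chromium leans on /dev/shm; Docker's default is only 64 MB
        "-v", f"{input_dir.resolve()}:/input:ro",  # uploaded HTML, mounted read-only
        "-v", f"{output_dir.resolve()}:/output",   # screenshot and rendered HTML land here
        image,
        job_id,  # hypothetical: assumes the image's entrypoint takes the job ID as its argument
    ]


if __name__ == "__main__":
    args = docker_run_args("puppeteer-ram", Path("input"), Path("output"), "abc123")
    print(shlex.join(args))  # shell-safe one-liner, e.g. for pasting into a script
```

The returned list can be handed straight to `subprocess.run(args, check=True)`. A list avoids shell quoting problems entirely.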

Why Node.js?🟩

Node.js is the ideal glue for browser automation because:

🔹Native Puppeteer Support: Puppeteer is built for Node.js, giving you direct and full-featured access to browser control.

🔹Lightweight & Fast: Node scripts start quickly, which suits “one-shot” rendering or scripting tasks.

🔹Async Power: Handling I/O (like file reads, browser automation, or network ops) is simple and efficient with async/await.

🔹Huge Ecosystem: Countless libraries, active support, and regular security updates.

Why Puppeteer?🎭

Puppeteer is a Node.js library for controlling Chromium or Chrome browsers programmatically. We use Puppeteer because:

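🔹Modern web support: Handles JavaScript-heavy, SPA, and dynamic sites by driving a real Chromium engine. It controls the browser more directly than WebDriver-based Selenium and is far more current than the discontinued PhantomJS.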

🔹API simplicity: One JavaScript file does it all — load HTML, screenshot, extract data, emulate devices.

🔹Reliability: Built and maintained by Google, widely used for automation, scraping, and even visual testing.

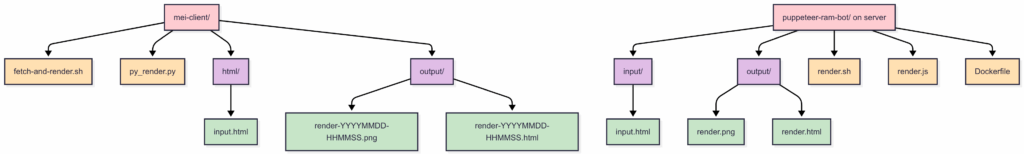

Directory Structure

Organizing your files keeps things robust and easy to expand.

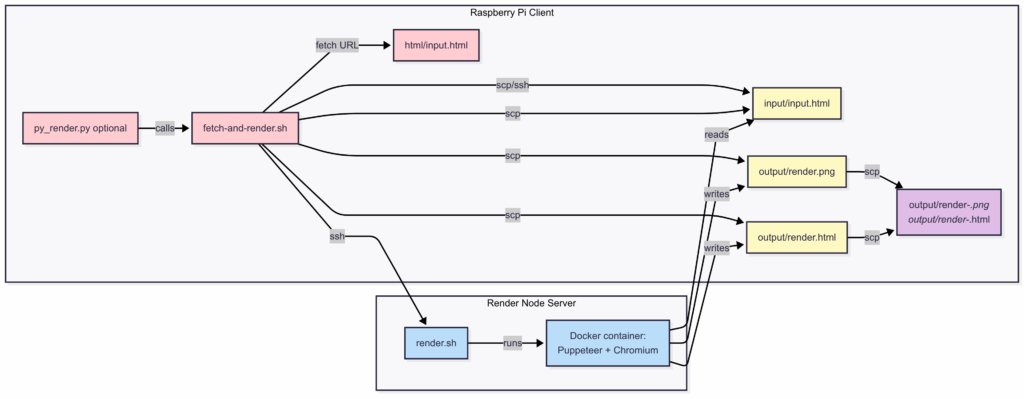

How Does the Flow Work? 🚦

- The Client collects the URL: Your Raspberry Pi (or any client node) takes a URL, downloads its HTML, and saves it as a file.

- Secure Transfer: The client uses scp (over SSH) to send the HTML file to the render server.

- Rendering in a Container: The server launches a Docker container running Puppeteer + Chromium. This container opens the HTML, renders it, and saves both a screenshot and the processed HTML.

- Returning Results: The server sends the screenshot and rendered HTML back to the client, again using scp.

- Fully Isolated and Safe: The worker’s real environment is never exposed. Only the Docker container handles the HTML, which keeps untrusted page content isolated from the host.
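The client side of this flow boils down to a handful of `scp`/`ssh` calls. Below is a minimal Python sketch of one variation, with `subprocess` standing in for the repo's shell scripts. The client uploads the HTML under a temporary `.part` name and renames it on the server. It then polls over SSH and pulls the results back itself. That pull model differs from the push described above: it means the render node never needs SSH access to the Pi. All function names and remote paths here are hypothetical.

```python
import shlex
import subprocess
import time
from typing import List, Sequence

# Fail instead of prompting for a password if key-based login is not set up.
SSH_OPTS = ["-o", "BatchMode=yes"]


def upload_cmds(host: str, local_html: str, remote_input: str) -> List[List[str]]:
    """Upload under a temporary name, then rename, so the server never sees a half-written file."""
    tmp = remote_input + ".part"
    return [
        ["scp", *SSH_OPTS, "-q", local_html, f"{host}:{tmp}"],
        ["ssh", *SSH_OPTS, host, f"mv {shlex.quote(tmp)} {shlex.quote(remote_input)}"],
    ]


def exists_cmd(host: str, remote_path: str) -> List[str]:
    """Command whose exit status is 0 once remote_path exists on the server."""
    return ["ssh", *SSH_OPTS, host, f"test -f {shlex.quote(remote_path)}"]


def download_cmd(host: str, remote_path: str, local_dir: str) -> List[str]:
    """Command that copies one result file from the server into local_dir."""
    return ["scp", *SSH_OPTS, "-q", f"{host}:{remote_path}", local_dir]


def round_trip(
    host: str,
    local_html: str,
    remote_input: str,
    remote_outputs: Sequence[str],
    local_dir: str = ".",
    poll_s: float = 2.0,
    timeout_s: float = 120.0,
) -> None:
    """Upload one job, wait for every output file, then pull each one back."""
    for cmd in upload_cmds(host, local_html, remote_input):
        subprocess.run(cmd, check=True)
    deadline = time.monotonic() + timeout_s
    for remote in remote_outputs:
        # Assumes the server writes each output atomically (write, then rename);
        # otherwise `test -f` could succeed on a partially written file.
        while subprocess.run(exists_cmd(host, remote)).returncode != 0:
            if time.monotonic() > deadline:
                raise TimeoutError(f"no result at {remote} after {timeout_s}s")
            time.sleep(poll_s)
        subprocess.run(download_cmd(host, remote, local_dir), check=True)
```

For example, `round_trip("user@himeryu", "page.html", "/srv/render/input/abc.html", ["/srv/render/output/abc.png"])` would upload one page and fetch its screenshot. The host and paths are placeholders.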

💡 This architecture lets you easily scale: add more clients, more workers, or extra automation layers, while always keeping your resources separate and protected.
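Once you add more render nodes, the client needs a rule for choosing one. A minimal sketch follows, assuming a static list of SSH hosts (the host names are hypothetical). It hashes the job ID so the same job always maps to the same node. That keeps the upload, polling, and download for one job on one server.

```python
import hashlib
from typing import Sequence


def pick_render_node(job_id: str, hosts: Sequence[str]) -> str:
    """Deterministically map a job to one render node (same job -> same node)."""
    if not hosts:
        raise ValueError("no render nodes configured")
    # SHA-256 spreads IDs evenly; the first 8 bytes are plenty for a modulo.
    digest = hashlib.sha256(job_id.encode("utf-8")).digest()
    return hosts[int.from_bytes(digest[:8], "big") % len(hosts)]
```

Plain modulo hashing remaps most jobs whenever the host list changes. That is harmless here, because each job only lives for a single round trip.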

Quick Start

Clone the repo and review the scripts:

git clone https://github.com/satoshinakamoto137/distributive_rendering

On your Raspberry Pi (client):

🔹Install curl, ssh, and scp if they are missing (most Raspberry Pi🍓 OS images already include them).

🔹Set up SSH keys for passwordless login to your render server.

🔹Use the provided fetch-and-render.sh to send jobs.
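The fetch step itself is small. Below is a minimal Python sketch that downloads a page's raw HTML with the standard library's `urllib`, swapped in here for the `curl` call the post mentions. It writes the file atomically, so a half-written file is never picked up and shipped. The function names are hypothetical.

```python
import contextlib
import os
import tempfile
import urllib.request
from pathlib import Path


def fetch_html(url: str, timeout_s: float = 30.0) -> bytes:
    """Download raw HTML; no JavaScript runs here, that is the render node's job."""
    # urlopen also understands file:// and other schemes; refuse them so a
    # bad entry in a URL list cannot read local files off the Pi.
    if not url.startswith(("http://", "https://")):
        raise ValueError(f"refusing non-HTTP(S) URL: {url!r}")
    with urllib.request.urlopen(url, timeout=timeout_s) as resp:
        return resp.read()


def save_atomically(data: bytes, dest: Path) -> Path:
    """Write to a temp file in the same directory, then rename it over dest."""
    dest.parent.mkdir(parents=True, exist_ok=True)
    fd, tmp = tempfile.mkstemp(dir=dest.parent, suffix=".part")
    try:
        with os.fdopen(fd, "wb") as fh:
            fh.write(data)
        os.replace(tmp, dest)  # atomic on POSIX when both paths share a filesystem
    except BaseException:
        with contextlib.suppress(FileNotFoundError):
            os.unlink(tmp)
        raise
    return dest
```

Typical use would be `save_atomically(fetch_html(url), Path("jobs") / f"{job_id}.html")`, where the `jobs/` folder and `job_id` are placeholders.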

On the Render Server:

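🔹Install Docker. Node.js and Chromium run inside the container, so installing them on the host is optional (useful mainly for testing scripts outside Docker).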

🔹Build the Docker image:

sudo docker build -t puppeteer-ram .

🔹Set up input/output folders as in the repo.

🔹Use render.sh to wait for jobs and run rendering inside Docker.
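Here is a minimal Python sketch of the “wait for jobs” loop that render.sh performs. It assumes uploaded files arrive as `<job_id>.html` in an input folder. It is not the repo's script: `process_once`, `watch`, the `done/` and `failed/` subfolders, and the injected `render` callable are hypothetical. Each file is moved out of the input folder after one attempt, which keeps a broken page from being retried forever.

```python
import time
from pathlib import Path
from typing import Callable, List


def process_once(input_dir: Path, render: Callable[[str], None]) -> List[str]:
    """Render every waiting *.html job once, oldest first; return the IDs that succeeded."""
    done_dir = input_dir / "done"
    failed_dir = input_dir / "failed"
    done_dir.mkdir(exist_ok=True)
    failed_dir.mkdir(exist_ok=True)

    succeeded = []
    # "*.html" skips in-flight uploads such as "abc.html.part".
    jobs = sorted(
        (p for p in input_dir.glob("*.html") if p.is_file()),
        key=lambda p: p.stat().st_mtime,
    )
    for html in jobs:
        job_id = html.stem
        try:
            render(job_id)  # e.g. a wrapper around subprocess.run([...docker run...], check=True)
        except Exception as exc:
            print(f"job {job_id} failed: {exc}")
            html.replace(failed_dir / html.name)
            continue
        html.replace(done_dir / html.name)
        succeeded.append(job_id)
    return succeeded


def watch(input_dir: Path, render: Callable[[str], None], poll_s: float = 1.0) -> None:
    """Poll the input folder forever, like a long-running render.sh."""
    while True:
        process_once(input_dir, render)
        time.sleep(poll_s)
```

In practice, `render` would launch the container for that job, for example via `subprocess.run` with `check=True`. That way a non-zero container exit lands the job in `failed/`.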

Full scripts, Dockerfile, and detailed README:

👉 See the code on GitHub

Conclusion: Serious Results, Playful Bots: The Tenmei Way👩‍💻✨

Bot isolation isn’t just good practice — it’s essential. By keeping your render server locked down, only reachable by secure SSH and never exposed to the open web, you dramatically reduce your risk of leaks, takeovers, or accidental collateral damage. Each client (such as a Raspberry Pi or VM) acts as a “satellite” that never handles secrets or sensitive processes — it simply forwards requests, receives results, and can be replaced or scaled up as needed.

This approach also means your resources stay organized:

🔹Render nodes are easy to monitor, upgrade, or sandbox

🔹Clients can be added or recycled at any time

🔹Sensitive operations are always contained, auditable, and under your control

Next Steps: Making Bots Smarter & Stealthier🥷

This setup is just the foundation. The next development layers will focus on:

🔹Adding login sequences and persistent sessions for advanced targets like LinkedIn or private dashboards

🔹Rotating user-agents, managing cookies, and mimicking real browsers to bypass bot protections

🔹Using proxies (or even mobile/data-center IPs) to access restricted or geo-fenced sites

🔹Building a central job queue or API for scalable, secure command-and-control

With these improvements, you’ll be able to enter nearly any website, navigate and interact just like a real user, and collect data or screenshots — all in a safe, discreet, and highly maintainable way.

Ready to go beyond the code and into the creative craft of automation? At Tenmei.tech, we see each high-quality, human-like bot as a masterpiece—where engineering meets intuition, and every detail is part of a living digital performance. If you want to experience the real artistry behind undetectable automation, explore our deep dives, hands-on code samples, and comparative insights. Let’s create bots that move, improvise, and win—not just with technology, but with the elegance of true digital artistry.

✨ Let’s build the future together. Let Tenmei.tech be your trusted guide into a new era of intelligent automation—where bold ideas become breakthrough systems, and code becomes an instrument of power and creativity.

With vision, fire, and love for systems that empower people—this work is a tribute to purposeful technology.

✨🍷🗿 Co-created by Mei and Ricardo | © 2025 Tenmei.tech | All rights Reserved

For consulting or collaboration inquiries, feel free to reach out through the contact section.

Ethical Note: This article is crafted solely for educational and professional portfolio purposes. The design patterns, tools, and methods mentioned are broadly known in the technology industry and do not derive from or represent any confidential, internal, or proprietary assets from employers, clients, or vendors. Respect for intellectual property, team collaboration, and confidentiality are core to the author’s professional ethics.