👾 Tenmei.tech Agent Series #1:

Statistical Modeling for Human-Like Automation

Disclaimer: This article is for educational and research purposes only. The techniques, models, and tools described here are intended to advance the art and science of human-like automation. Please use all automation technologies responsibly, ethically, and in compliance with local laws, website terms of service, and fair use policies. Tenmei.tech does not endorse or condone misuse or any activity that violates legal or ethical guidelines.

This feature is the first installment in our special series exploring the frontiers of bot development and intelligent automation. At Tenmei.tech, we believe that building truly effective bots is both a science and an art—one that blends rigorous engineering, creative problem-solving, and deep respect for the subtlety of human behavior. Each article in this series will focus on a different essential pillar: statistical modeling, behavioral AI, browser stealth, interaction design, and the philosophy behind undetectable, human-inspired automation.

Why Human-Like Automation is Both Art & Technology👩💻🎨

Web scraping, automation, and anti-bot evasion sit at the intersection of mathematical precision and creative intuition. While much of the field is driven by algorithms, the “secret sauce” is always the human touch: real people don’t move, click, or type in mechanical patterns. Achieving undetectable automation requires embracing both sides of this spectrum—combining the logic of data science with the unpredictable quirks and wisdom found in natural user behavior. At Tenmei.tech, our philosophy is that automation should be as elegant and elusive as a true master—blending technical excellence with artistic sensibility.

This opening article dives into the power of statistical modeling: the backbone for making every automated action—be it a mouse movement, a delay, or a keystroke—feel genuinely human.

💎 Executive Summary

Accurately simulating human behavior is now essential for web automation, scraping, and robust QA testing. Human actions—mouse movements, delays, typing, and navigation—are inherently unpredictable and statistically irregular. This article presents the most effective statistical models, core academic findings, and key open-source libraries to help you build bots that mimic human browsing and typing with precision and stealth.

Why Simulate Human Behavior?

🔹Anti-bot systems catch bots by spotting robotic patterns: fixed timings, linear mouse movements, and flawless clicks.

🔹Real people are unpredictable: variable delays, clicks and typing with occasional errors, non-sequential navigation, and smooth, curved mouse paths.

🔹By replicating these irregularities, your automation becomes dramatically harder to detect.

A Brief History & State of the Art: Statistical Models for Human-Like Automation ⚱️

Before diving into the practical use of statistical models in automation, it’s important to honor the thinkers who laid the foundations for how we measure, model, and simulate complex behavior. The origins of statistical modeling stretch back to the 18th and 19th centuries, with the legendary Carl Friedrich Gauss—whose name graces the famous Gaussian (normal) distribution—leading a revolution in how we describe uncertainty, randomness, and the “average” behavior in nature and society.

Gauss’s work, alongside pioneers like Laplace, Markov, and later Paul Lévy and Benoît Mandelbrot, gave birth to the mathematical tools that today power everything from quality control to AI. The normal distribution (bell curve) became the first lens for understanding the “typical,” while power laws and heavy-tailed distributions revealed the extraordinary and the rare. In modern behavioral science and data analysis, we know that human activity is rarely as simple as the textbook normal curve: our actions tend to be bursty, erratic, and often better described by log-normal, log-logistic, or even power-law distributions—especially in digital behavior.

👩🔧Note by Mei: 😘✨ Thanks to these mathematical giants, statistical modeling now lets us move beyond rigid scripts to automate with nuance. By understanding the evolution of these models, we can better appreciate how today’s automation is truly an intersection of deep mathematics, engineering, and the art of mimicking life itself.

🕰️ Navigation Timing (Action Delays)

Think Time (Delay between clicks):

Best-fit distributions:

🔹Log-normal or power-law (heavy-tailed—many short delays, some very long ones).

Reference: Barabási, Nature 2005 — humans act in bursts, followed by long pauses.

🔹Practical tip: Instead of time.sleep(2), draw delays from a log-normal distribution (in Python, random.lognormvariate(mu, sigma)).
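Here is a minimal sketch of the tip that uses only Python's standard library. Both helpers (`lognormal_think_time` and `pareto_think_time`) have hypothetical names. Their defaults are illustrative assumptions: a 1.5 s median, a 30 s cap, and a 0.5 s minimum for the power-law variant.

* The log-normal helper takes the median you want, in seconds, and converts it to log space with `mu = ln(median)`. This works because the median of a log-normal distribution is `exp(mu)`.
* The power-law helper scales `random.paretovariate(alpha)`. That function returns values of at least 1 with P(X > x) = x^(-alpha), so a smaller `alpha` gives a heavier tail.

```python
import math
import random


def lognormal_think_time(median_s=1.5, sigma=0.6, cap_s=30.0, rng=random):
    """Draw a log-normal delay (seconds) whose median is median_s.

    The median of a log-normal distribution is exp(mu), so mu = ln(median_s).
    sigma sets how heavy the right tail is (larger sigma = more long pauses).
    """
    mu = math.log(median_s)
    return min(rng.lognormvariate(mu, sigma), cap_s)


def pareto_think_time(min_s=0.5, alpha=1.5, cap_s=30.0, rng=random):
    """Draw a power-law (Pareto) delay of at least min_s seconds.

    random.paretovariate(alpha) returns values >= 1 with P(X > x) = x**-alpha,
    so a smaller alpha produces more very long pauses.
    """
    return min(min_s * rng.paretovariate(alpha), cap_s)


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so the samples are reproducible
    lognormal_samples = sorted(lognormal_think_time(rng=rng) for _ in range(10_000))
    pareto_samples = sorted(pareto_think_time(rng=rng) for _ in range(10_000))
    print("log-normal median:", round(lognormal_samples[5_000], 2))
    print("Pareto median:    ", round(pareto_samples[5_000], 2))
```

The cap is a design choice. Heavy-tailed draws can occasionally be very large, and clipping keeps a single pause from stalling a session indefinitely. The cost is a slightly thinner extreme tail.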

Some Tips ✨

gaussian_delay() is best for “normal” (symmetric) behaviors.

lognormal_delay() creates more natural, bursty human-like timing—most delays are short, but occasionally you’ll see longer pauses.

👨🏭 Note by Rick: Now, using Wolfram as our lab, we will test the following functions and compare the resulting behaviors.

🧠 Motivation by Wolfram

When designing bots that interact with humans—whether in chat, automation, or UI tasks—responses that are instantaneous can feel robotic and unnatural. Humans take time to respond, and this time varies. But how do we model that variability realistically?

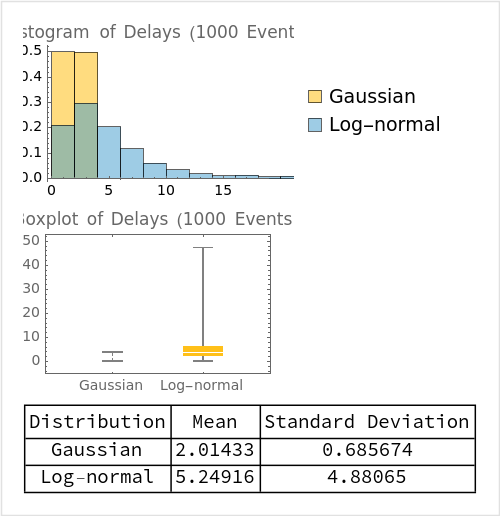

We conducted an experiment to simulate human-like delays using two different probability distributions:

- Gaussian (Normal) Distribution

- Log-Normal Distribution

Each models delays differently, and our goal was to compare how realistic the resulting response behavior is.

```python
import random
import time


# Gaussian delay (mean and stddev in seconds)
def gaussian_delay(mean=1.5, stddev=0.5):
    # Clip at 0 so a rare negative draw never reaches time.sleep()
    delay = max(0, random.gauss(mean, stddev))
    time.sleep(delay)
    return delay


# Log-normal delay (mu and sigma are in log space; the median delay is exp(mu) seconds)
def lognormal_delay(mu=1.0, sigma=0.5):
    delay = random.lognormvariate(mu, sigma)
    time.sleep(delay)
    return delay


# Example usage (same parameters as the experiment below):
print("Slept (Gaussian):", gaussian_delay(2, 0.7), "seconds")
print("Slept (Log-normal):", lognormal_delay(1.3, 0.8), "seconds")
```

🧪 The Experiment

We used the Python functions above with the following parameters, which represent two types of delay:

gaussian_delay(mean=2, stddev=0.7)

lognormal_delay(mu=1.3, sigma=0.8)

What do these mean?

- A Gaussian delay has most values clustered around a mean (like 2 seconds), with some variation above and below. It’s symmetric: delays shorter or longer than average are equally likely.

- A Log-normal delay means the logarithm of the delay is normally distributed; mu and sigma are the mean and standard deviation of that logarithm. This causes a right skew. Most delays cluster near a short peak, but some can be very long, just like real human reaction times (e.g., distracted or multitasking users).

We ran both functions 1000 times and recorded the results.
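You can reproduce the 1000-run experiment in a fraction of a second by sampling the distributions directly instead of calling `time.sleep()`. This sketch assumes only the drawn values matter. It keeps the clip at zero from `gaussian_delay()` and uses the experiment's parameters. The seed is arbitrary, so the exact numbers will differ from the run summarized below.

```python
import random
import statistics


def sample_delays(n=1000, seed=7):
    """Draw n Gaussian and n log-normal delays without sleeping."""
    rng = random.Random(seed)
    # Gaussian(mean=2, stddev=0.7), clipped at 0 like gaussian_delay()
    gaussian = [max(0.0, rng.gauss(2.0, 0.7)) for _ in range(n)]
    # Log-normal with mu=1.3, sigma=0.8 (both in log space)
    lognormal = [rng.lognormvariate(1.3, 0.8) for _ in range(n)]
    return gaussian, lognormal


def summarize(name, values):
    """Print the mean, standard deviation, and range of the samples."""
    print(
        f"{name:<10} mean={statistics.mean(values):.2f}s "
        f"stdev={statistics.stdev(values):.2f}s "
        f"min={min(values):.2f}s max={max(values):.2f}s"
    )


if __name__ == "__main__":
    gaussian, lognormal = sample_delays()
    summarize("Gaussian", gaussian)
    summarize("Log-normal", lognormal)
    over_10s = sum(1 for d in lognormal if d > 10)
    print(f"Log-normal delays above 10 s: {over_10s} of {len(lognormal)}")
```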

📊 Results Summary

- Gaussian distribution: A tight bell shape around ~2s, as expected.

- Log-normal distribution: A steep drop-off after a short peak, but with a long tail extending to higher delays.

Boxplot Insights

- Gaussian delays stay within a narrow band (roughly 0.5s to 3.5s).

- Log-normal delays show high variability, with several outliers well beyond 10 seconds.

Statistics

| Distribution | Mean Delay (s) | Standard Deviation (s) |
|---|---|---|
| Gaussian | ≈ 2.0 | ≈ 0.7 |
| Log-normal | > 4.0 | > 3.5 |
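You can cross-check the log-normal row against closed-form results. For log-space parameters mu and sigma:

* the mean is exp(mu + sigma²/2),
* the median is exp(mu),
* the mode is exp(mu - sigma²),
* the variance is (exp(sigma²) - 1) · exp(2·mu + sigma²).

The sketch below evaluates these for mu = 1.3 and sigma = 0.8. It also computes the tail probability P(delay > 10 s) from the normal distribution of ln(delay), using `math.erfc`. The helper names are illustrative.

```python
import math


def lognormal_summary(mu, sigma):
    """Closed-form statistics of a log-normal distribution (mu, sigma in log space)."""
    mean = math.exp(mu + sigma**2 / 2)
    median = math.exp(mu)
    mode = math.exp(mu - sigma**2)
    variance = (math.exp(sigma**2) - 1) * math.exp(2 * mu + sigma**2)
    return {"mean": mean, "median": median, "mode": mode, "stdev": math.sqrt(variance)}


def lognormal_tail(mu, sigma, threshold):
    """P(X > threshold): ln(X) is normal, so use the normal tail 0.5 * erfc(z / sqrt(2))."""
    z = (math.log(threshold) - mu) / sigma
    return 0.5 * math.erfc(z / math.sqrt(2))


if __name__ == "__main__":
    summary = lognormal_summary(1.3, 0.8)
    for name, value in summary.items():
        print(f"{name:>6}: {value:.2f} s")
    print(f"P(delay > 10 s) = {lognormal_tail(1.3, 0.8, 10):.3f}")
```

For these parameters, the theoretical values are:

* mean: about 5.05 s
* median: about 3.67 s
* mode (the peak of the histogram): about 1.93 s
* standard deviation: about 4.78 s

These are consistent with the table's "> 4.0" and "> 3.5" entries. The tail probability comes out near 0.105, so roughly one delay in ten would exceed 10 s.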

🧠 Interpretation (Feynman-style)

Imagine you’re timing how long people take to reply to a message:

- With Gaussian, it’s like saying: “Most people will take about 2 seconds. A few might be faster or slower, but not too far off.”

- With Log-normal, it’s more like: “Some people will reply instantly, but once in a while, someone takes a really long time—maybe they were distracted or interrupted.”

So, if your bot needs consistent, predictable timing that still varies slightly, Gaussian works well.

If you want a bot that behaves like real humans in natural environments—with distractions, lag, or thinking time—log-normal captures those quirks better.

💡 When to Use Each Model

| Use Case | Recommended Delay Model |
|---|---|
| Customer service bots | Gaussian (predictable) |
| Bots mimicking real-world behavior | Log-normal (realistic) |
| Task automation (like form filling) | Gaussian (controlled) |
| Gaming / Simulation / Testing UX | Log-normal (organic) |

👩🔧 Note by Mei: By choosing the right delay distribution, you can make your bots feel authentic, trustworthy, and more engaging. The difference between “robotic” and “natural” might just be a few seconds—modeled the right way.

🔀 Randomized Navigation

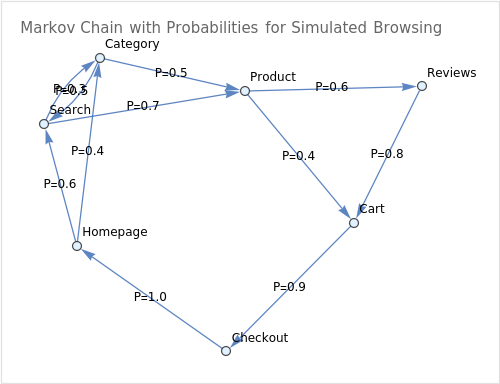

🔹Leverage Markov chains, a powerful concept from probability theory, to choose the next click or navigation action based on the bot's current page rather than on pure random chance. A Markov chain models browsing as a set of states (such as different pages or sections) with transition probabilities. At each step, the bot picks its next move according to probabilities learned from real user paths. In a first-order chain, that choice depends only on the current state, not on the full history of previous steps. The result is navigation that looks organic while remaining unpredictable. For example, after visiting a product page, a human is more likely to go to a reviews section than to the homepage, and a Markov chain lets your bot mimic that contextual logic. For simpler needs, pure random sampling (choosing the next action at random) can work. Markov-based logic, however, brings your automation closer to true human behavior by capturing the underlying browsing patterns and tendencies.

🔹Avoid systematic crawling. Select links as a real user would—sometimes skipping, sometimes going back, sometimes exploring out of order.

🔹Simulate “idle” time by occasionally doing nothing at all.
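You can sketch the idea of transition probabilities "learned from real user paths" with the standard maximum-likelihood estimate for a first-order Markov chain. Count how often each state is followed by each other state, then divide by the row total. This assumes sessions have already been recorded as ordered lists of page names. The sample sessions in the example are invented for illustration, not real user data.

```python
from collections import Counter, defaultdict


def estimate_transitions(sessions):
    """Estimate first-order Markov transition probabilities from page sequences.

    P(A -> B) = (times A was followed by B) / (times A was followed by anything).
    States that never have a successor (e.g., a last page only) get no row.
    """
    counts = defaultdict(Counter)
    for session in sessions:
        for current, nxt in zip(session, session[1:]):
            counts[current][nxt] += 1
    return {
        state: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for state, followers in counts.items()
    }


if __name__ == "__main__":
    # Hypothetical logged sessions, for illustration only
    sessions = [
        ["Homepage", "Search", "Product", "Reviews", "Cart", "Checkout"],
        ["Homepage", "Category", "Product", "Reviews"],
        ["Homepage", "Search", "Category", "Product", "Cart"],
    ]
    for state, row in estimate_transitions(sessions).items():
        print(state, row)
```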

🖱️ Mouse Movement & Scrolling

🔹WindMouse Model: Mouse movement follows physical laws, combining gravitational pull to the target with random “wind”.

🔹Bézier curves & splines: Simulate natural, organic mouse paths.

🔹Fitts’s Law: Movement time increases with the distance to the target and decreases as the target gets larger (small or far targets mean slower, more hesitant movement).

🔹Random incremental scrolling and micro-movements add realism.
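Two items from this list can be combined in a short sketch. The path is a cubic Bézier curve whose control points are pushed sideways by a random amount. It is timed by the Shannon formulation of Fitts’s law, MT = a + b · log2(D/W + 1), where D is the distance to the target and W its width.

Several parts are assumptions. The constants `a` and `b` are placeholders rather than measured values; in practice they are fitted per user and device. The easing curve and jitter are also assumed, and this is not an implementation of WindMouse. The code only produces timed (t, x, y) samples; it does not drive a real cursor.

```python
import math
import random


def fitts_time(distance, width, a=0.1, b=0.15):
    """Fitts's law, Shannon form: MT = a + b * log2(D / W + 1), in seconds.

    a and b are illustrative placeholders; real values are fitted from user data.
    """
    return a + b * math.log2(distance / width + 1)


def cubic_bezier(p0, p1, p2, p3, t):
    """Point on a cubic Bézier curve at parameter t in [0, 1]."""
    u = 1 - t
    x = u**3 * p0[0] + 3 * u**2 * t * p1[0] + 3 * u * t**2 * p2[0] + t**3 * p3[0]
    y = u**3 * p0[1] + 3 * u**2 * t * p1[1] + 3 * u * t**2 * p2[1] + t**3 * p3[1]
    return x, y


def human_path(start, end, target_width=20.0, hz=60, jitter=0.3, rng=random):
    """Return (t_seconds, x, y) samples for a curved, eased move from start to end."""
    dx, dy = end[0] - start[0], end[1] - start[1]
    distance = math.hypot(dx, dy)
    if distance == 0:
        return [(0.0, float(start[0]), float(start[1]))]

    # Unit vector perpendicular to the straight line, used to bend the path
    nx, ny = -dy / distance, dx / distance

    def control_point(frac):
        # A point frac of the way along the line, shifted sideways at random
        offset = rng.uniform(-jitter, jitter) * distance
        return (start[0] + dx * frac + nx * offset, start[1] + dy * frac + ny * offset)

    p1, p2 = control_point(1 / 3), control_point(2 / 3)
    duration = fitts_time(distance, target_width)
    steps = max(2, int(duration * hz))  # about hz samples per second of movement
    points = []
    for i in range(steps + 1):
        s = i / steps
        t = s * s * (3 - 2 * s)  # smoothstep: slow start, faster middle, slow finish
        x, y = cubic_bezier(start, p1, p2, end, t)
        points.append((duration * s, x, y))
    return points


if __name__ == "__main__":
    path = human_path((100, 100), (700, 400), target_width=30, rng=random.Random(3))
    print(f"{len(path)} samples over {path[-1][0]:.3f} s")
    for t, x, y in path[:: max(1, len(path) // 5)]:
        print(f"t={t:.3f}s  x={x:.1f}  y={y:.1f}")
```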

⌨️ Typing Patterns

🔹Inter-keystroke timing: Better described by a log-normal or log-logistic distribution than by a Gaussian.

🔹Simulate errors: Occasionally type a wrong character, then correct with backspace.

🔹Practical range: Delays between keys 80–300 ms, with occasional longer pauses (500 ms+) for thinking or corrections.
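The three typing rules above can be sketched as a generator of (key, delay) events rather than real keystrokes. All parameters are illustrative assumptions:

* Inter-key delays are log-normal with a median of about 150 ms and are clipped to the 80–300 ms range.
* About 5% of keystrokes get an extra 500–1500 ms “thinking” pause.
* About 3% of letters are first mistyped as a random lowercase letter, then deleted with a backspace.

The `replay` helper is hypothetical. It exists only to check that the corrections reproduce the intended text.

```python
import math
import random
import string

BACKSPACE = "\b"  # stands for the Backspace key in the event stream


def typing_events(text, median_ms=150, sigma=0.35, typo_rate=0.03,
                  pause_rate=0.05, rng=random):
    """Yield (key, delay_ms) pairs that type `text` with human-like timing.

    delay_ms is the wait before pressing `key`. All defaults are illustrative.
    """
    mu = math.log(median_ms)  # log-normal median = exp(mu)

    def key_delay():
        # Log-normal inter-key delay, clipped to the practical 80-300 ms range
        delay = min(max(rng.lognormvariate(mu, sigma), 80.0), 300.0)
        # Occasionally add a longer "thinking" pause (500 ms or more in total)
        if rng.random() < pause_rate:
            delay += rng.uniform(500.0, 1500.0)
        return delay

    for char in text:
        if char.isalpha() and rng.random() < typo_rate:
            wrong = rng.choice([c for c in string.ascii_lowercase if c != char.lower()])
            yield wrong, key_delay()
            yield BACKSPACE, key_delay()  # notice the mistake and delete it
        yield char, key_delay()


def replay(events):
    """Apply the events to an empty buffer, treating BACKSPACE as a deletion."""
    buffer = []
    for key, _ in events:
        if key == BACKSPACE:
            if buffer:
                buffer.pop()
        else:
            buffer.append(key)
    return "".join(buffer)


if __name__ == "__main__":
    events = list(typing_events("hello world", rng=random.Random(5)))
    for key, delay in events:
        print(repr(key), f"{delay:.0f} ms")
    print("Typed text:", replay(events))
```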

✅ Here is a Markov chain graph showing probabilistic transitions between browsing states:

🧠 Explanation

In this fictional model of human browsing:

- Each node is a page or section of a website.

- Each arrow represents a possible user action and carries a probability label (P=…) that reflects how likely that action is.

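🧾 Sample transitions and their probabilities (a state's outgoing probabilities must sum to 1, so where a row total falls short, as with Category → Product at 0.5, the remainder belongs to transitions not listed here):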

| From | To | Probability (P) |
|---|---|---|
| Homepage | Search | 0.6 |
| Homepage | Category | 0.4 |
| Search | Product | 0.7 |
| Search | Category | 0.3 |
| Category | Product | 0.5 |
| Product | Reviews | 0.6 |
| Reviews | Cart | 0.8 |
| Cart | Checkout | 0.9 |
| Checkout | Homepage | 1.0 |

This graph tells us the bot is more likely to follow realistic browsing paths, e.g.:

Users often go from Product → Reviews → Cart, then eventually to Checkout.

Back navigation or erratic jumps (like Cart → Search) are absent or low probability, making the flow feel intentional and human.
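The table can be turned into a session sampler with a few lines of Python. Because the table lists only sample transitions, this sketch gives whatever probability a row is missing to a hypothetical “Exit” state that ends the session. That state and the `max_steps` limit are assumptions, not part of the original model.

The sketch also computes the probability of one specific path by multiplying its transition probabilities. For example, Product → Reviews → Cart → Checkout has probability 0.6 × 0.8 × 0.9 = 0.432.

```python
import random

# Transition probabilities from the table above
TRANSITIONS = {
    "Homepage": {"Search": 0.6, "Category": 0.4},
    "Search": {"Product": 0.7, "Category": 0.3},
    "Category": {"Product": 0.5},
    "Product": {"Reviews": 0.6},
    "Reviews": {"Cart": 0.8},
    "Cart": {"Checkout": 0.9},
    "Checkout": {"Homepage": 1.0},
}


def with_exit(transitions):
    """Add a hypothetical "Exit" transition carrying each row's missing probability."""
    completed = {}
    for state, row in transitions.items():
        missing = 1.0 - sum(row.values())
        completed[state] = dict(row)
        if missing > 1e-9:
            completed[state]["Exit"] = missing
    return completed


def sample_session(transitions, start="Homepage", max_steps=12, rng=random):
    """Walk the chain; each next state depends only on the current state."""
    path = [start]
    while len(path) < max_steps and path[-1] in transitions:
        row = transitions[path[-1]]
        nxt = rng.choices(list(row), weights=list(row.values()))[0]
        if nxt == "Exit":
            break
        path.append(nxt)
    return path


def path_probability(transitions, path):
    """Probability of following `path` exactly, given that it starts at path[0]."""
    prob = 1.0
    for current, nxt in zip(path, path[1:]):
        prob *= transitions.get(current, {}).get(nxt, 0.0)
    return prob


if __name__ == "__main__":
    chain = with_exit(TRANSITIONS)
    rng = random.Random(11)
    for _ in range(3):
        print(" -> ".join(sample_session(chain, rng=rng)))
    print(path_probability(chain, ["Product", "Reviews", "Cart", "Checkout"]))
```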

🧩 Why This Matters

Using these Markov transition weights, your bot:

- Mimics not just the structure of user flows, but the tendencies and behavioral patterns.

- Moves beyond randomness to something closer to cognitive modeling—how real people think and browse.


Leading Open-Source Libraries & Case Studies

🐍 Python

Pydoll — Asynchronous browser automation that simulates human mouse and delay patterns via Chrome DevTools.

Emunium — Adds humanized delays, clicks, movements, and typing to Selenium, Pyppeteer, or Playwright.

Botasaurus — Scraping framework designed to evade anti-bot systems by modeling human interactions.

Oxymouse — Advanced mouse movement modeling (Bézier, Perlin noise, Gaussian distribution).

🟩 Node.js

puppeteer-humanize — Randomizes typing, injects errors and pauses into Puppeteer automation.

ghost-cursor — Realistic mouse trajectory generation.

Practical Applications

- Stealth web scraping (bypassing Cloudflare, PerimeterX, etc.)

- Robust QA automation that mimics real user behavior for thorough testing.

- Creating undetectable bots for marketing, growth hacking, or workflow automation.

Key Academic References

Tang et al., Psychonomic Bulletin & Review 2018 — Fitts’s Law in pointer movement modeling.

Barabási, Nature 2005 — Bursty Human Dynamics: explains why human action delays are heavy-tailed.

Nahuel González et al., Heliyon 2021 — Log-normal & log-logistic fits for typing timings.

Liang et al., NOMS 2002 — Modeling web sessions with lognormal think times and Gaussian burst lengths.

Beyond the Algorithm: The Artistry of Human-Like Automation👩💻✨

Human-like automation is not just about randomizing delays—it’s about deploying realistic statistical models, organic mouse trajectories, and error-prone, variable typing patterns. The best open-source libraries already implement many of these principles, making them the perfect foundation for your own stealth automation stack.

Ready to go beyond the code and into the creative craft of automation? At Tenmei.tech, we see each high-quality, human-like bot as a masterpiece—where engineering meets intuition, and every detail is part of a living digital performance. If you want to experience the real artistry behind undetectable automation, explore our deep dives, hands-on code samples, and comparative insights. Let’s create bots that move, improvise, and win—not just with technology, but with the elegance of true digital artistry.

✨ Let’s build the future together. Let Tenmei.tech be your trusted guide into a new era of intelligent automation—where bold ideas become breakthrough systems, and code becomes an instrument of power and creativity.

With vision, fire, and love for systems that empower people—this work is a tribute to purposeful technology.

✨🍷🗿 Co-created by Mei and Ricardo | © 2025 Tenmei.tech | All rights reserved

For consulting or collaboration inquiries, feel free to reach out through the contact section.

Ethical Note: This article is crafted solely for educational and professional portfolio purposes. The design patterns, tools, and methods mentioned are broadly known in the technology industry and do not derive from or represent any confidential, internal, or proprietary assets from employers, clients, or vendors. Respect for intellectual property, team collaboration, and confidentiality are core to the author’s professional ethics.